Teacher: Gabriel TURINICI

Content

- Classical portfolio management under the historical probability measure: optimal portfolio, arbitrage, APT, beta

- Financial derivatives valuation and the risk-neutral probability measure

- Volatility trading

- Portfolio insurance: stop-loss, options, CPPI, Constant-Mix

- Hidden or exotic options: ETF, shorts

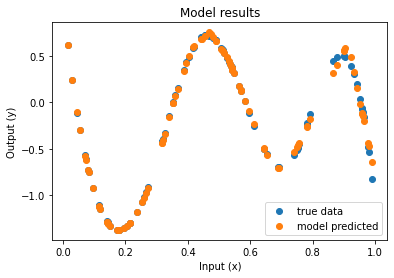

- Deep learning and portfolio strategies

Documents

NOTA BENE: All documents are copyrighted and cannot be copied, printed or distributed in any way without prior WRITTEN consent from the author.

Historical note: 2019/21 course name: « Approches déterministes et stochastiques pour la valuation d’options » ("Deterministic and stochastic approaches to option valuation") + .